In a cluttered open-plan office in Mountain View, California, a tall and slender wheeled robot has been busy playing tour guide and informal office helper, thanks to a large language model upgrade, Google DeepMind revealed today. The robot uses the latest version of Google’s Gemini large language model to both parse commands and find its way around.

When told by a human “Find me somewhere to write,” for instance, the robot dutifully trundles off, leading the person to a pristine whiteboard located somewhere in the building.

Gemini’s ability to handle video and text, in addition to its capacity to ingest large amounts of information in the form of previously recorded video tours of the office, allows the “Google helper” robot to make sense of its environment and navigate correctly when given commands that require some commonsense reasoning. The robot combines Gemini with an algorithm that generates specific actions for the robot to take, such as turning, in response to commands and what it sees in front of it.

When Gemini was introduced in December, Demis Hassabis, CEO of Google DeepMind, told WIRED that its multimodal capabilities would likely unlock new robot abilities. He added that the company’s researchers were hard at work testing the robotic potential of the model.

In a new paper outlining the project, the researchers behind the work say that their robot proved to be up to 90 percent reliable at navigating, even when given tricky commands such as “Where did I leave my coaster?” DeepMind’s system “has significantly improved the naturalness of human-robot interaction, and greatly increased the robot usability,” the team writes.

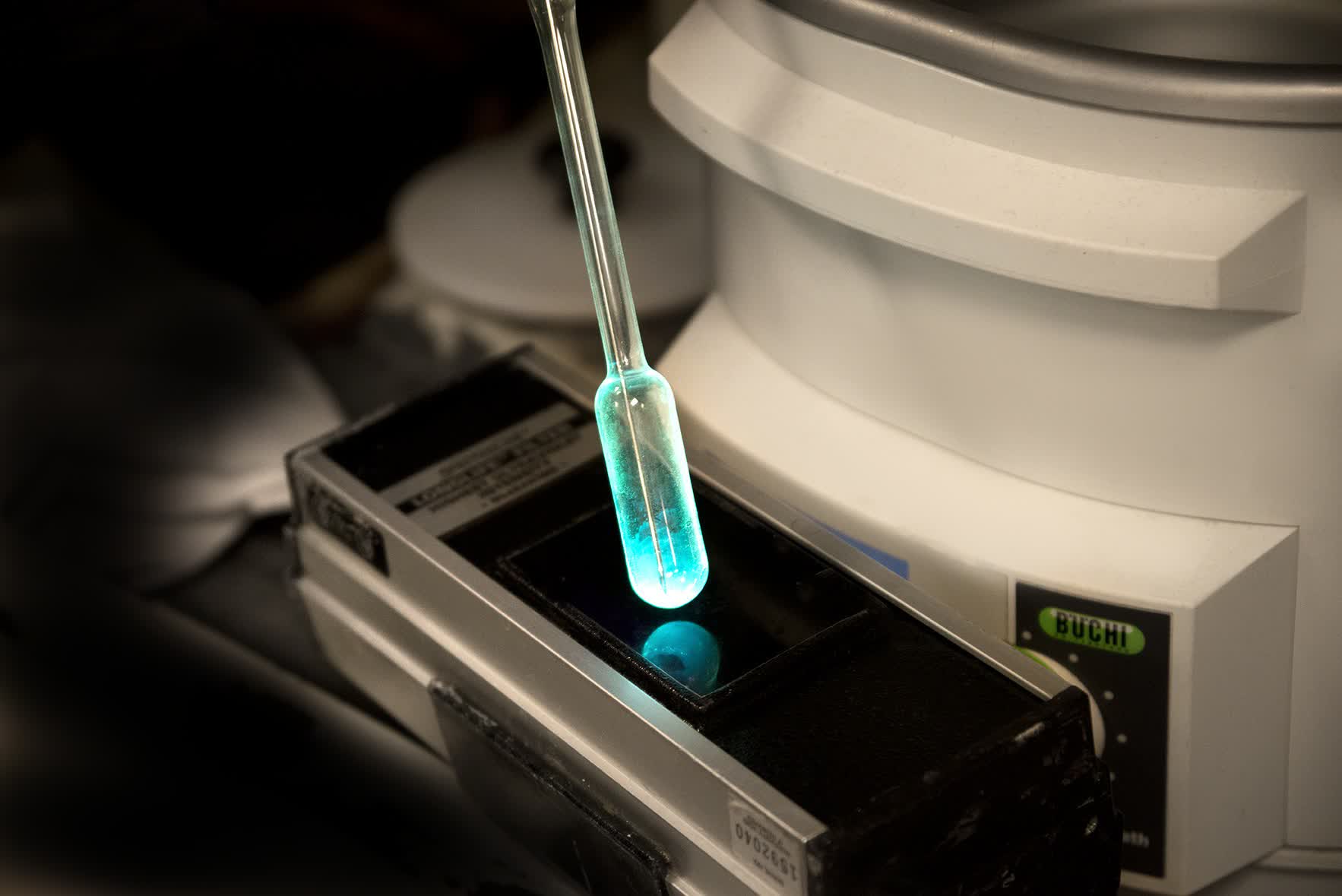

Courtesy of Google DeepMind

Photograph: Muinat Abdul; Google DeepMind

The demo neatly illustrates the potential for large language models to reach into the physical world and do useful work. Gemini and other chatbots mostly operate within the confines of a web browser or app, although they are increasingly able to handle visual and auditory input, as both Google and OpenAI have demonstrated recently. In May, Hassabis showed off an upgraded version of Gemini capable of making sense of an office layout as seen through a smartphone camera.

Academic and industry research labs are racing to see how language models might be used to enhance robots’ abilities. The May program for the International Conference on Robotics and Automation, a popular event for robotics researchers, lists almost two dozen papers that involve use of vision language models.

Investors are pouring money into startups aiming to apply advances in AI to robotics. Several of the researchers involved with the Google project have since left the company to found a startup called Physical Intelligence, which received an initial $70 million in funding; it is working to combine large language models with real-world training to give robots general problem-solving abilities. Skild AI, founded by roboticists at Carnegie Mellon University, has a similar goal. This month it announced $300 million in funding.

Just a few years ago, a robot would need a map of its environment and carefully chosen commands to navigate successfully. Large language models contain useful information about the physical world, and newer versions that are trained on images and video as well as text, known as vision language models, can answer questions that require perception. Gemini allows Google’s robot to parse visual instructions as well as spoken ones, following a sketch on a whiteboard that shows a route to a new destination.

In their paper, the researchers say they plan to test the system on different kinds of robots. They add that Gemini should be able to make sense of more complex questions, such as “Do they have my favorite drink today?” from a user with a lot of empty Coke cans on their desk.