From fake images of war to celebrity hoaxes, artificial intelligence technology has spawned new forms of reality-warping misinformation online. New analysis co-authored by Google researchers shows just how quickly the problem has grown.

The research, co-authored by researchers from Google, Duke University and several fact-checking and media organizations, was published in a preprint last week. The paper introduces a massive new dataset of misinformation going back to 1995 that was fact-checked by websites like Snopes.

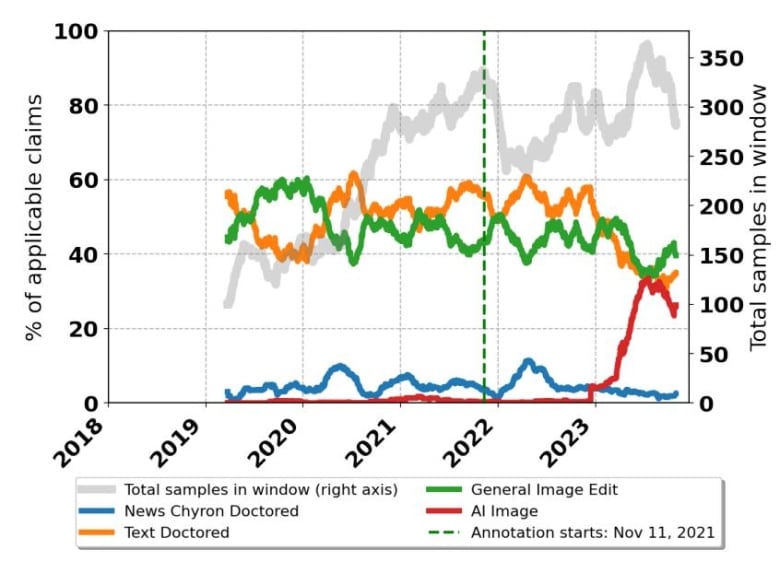

According to the researchers, the data reveals that AI-generated images have quickly risen in prominence, becoming nearly as common as more traditional forms of manipulation.

The work was first reported by 404 Media after being spotted by the Faked Up newsletter, and it clearly shows that “AI-generated images made up a minute proportion of content manipulations overall until early last year,” the researchers wrote.

Last year saw the release of new AI image-generation tools by major players in tech, including OpenAI, Microsoft and Google itself. Now, AI-generated misinformation is “nearly as common as text and general content manipulations,” the paper said.

The researchers note that the uptick in fact-checking AI images coincided with a general wave of AI hype, which may have led websites to focus on the technology. The dataset shows that fact-checking of AI content has slowed down in recent months, with traditional text and image manipulation seeing an increase.

The study looked at other forms of media, too, and found that video hoaxes now make up roughly 60 per cent of all fact-checked claims that include media.

That does not mean AI-generated misinformation has slowed down, said Sasha Luccioni, a leading AI ethics researcher at machine learning platform Hugging Face.

“Personally, I feel like this is because there are so many [examples of AI misinformation] that it’s hard to keep track!” Luccioni said in an email. “I see them regularly myself, even outside of social media, in advertising, for instance.”

AI has been used to generate fake images of real people, with concerning effects. For example, fake nude images of Taylor Swift circulated earlier this year. 404 Media reported that the tool used to create the images was Microsoft’s AI-generation software, which it licenses from ChatGPT maker OpenAI, prompting the tech giant to close a loophole that allowed the images to be generated.

The technology has also fooled people in more innocuous ways. Recent fake photos showing Katy Perry attending the Met Gala in New York (in reality, she never did) fooled observers on social media and even the star’s own parents.

The rise of AI has caused headaches for social media companies and Google itself. Fake celebrity images have been featured prominently in Google image search results in the past, thanks to SEO-driven content farms. Using AI to manipulate search results is against Google’s policies.

Fake, AI-generated sexually explicit images of Taylor Swift were feverishly shared on social media until X took them down after 17 hours. But many victims of the growing trend lack the means, clout and laws to accomplish the same thing.

Google spokespeople weren’t immediately available for comment. Previously, a spokesperson told technology news outlet Motherboard that “when we find instances where low-quality content is ranking highly, we build scalable solutions that improve the results not for just one search, but for a range of queries.”

To deal with the problem of AI fakes, Google has launched such initiatives as digital watermarking, which flags AI-generated images as fake with a mark that’s invisible to the human eye. The company, along with Microsoft, Intel and Adobe, is also exploring giving creators the option to add a visible watermark to AI-generated images.

“I think if Big Tech companies collaborated on a standard of AI watermarks, that would definitely help the field as a whole at this point,” Luccioni said.
