In early June, Apple researchers published a study that sheds light on how AI reasoning models work. They examined popular models including OpenAI’s o1 and o3, DeepSeek-R1, and Claude 3.7 Sonnet Thinking. They found that when these models faced novel problems requiring systematic thinking, they often relied on pattern-matching rather than real reasoning. This echoes results from a recent evaluation on United States of America Mathematical Olympiad (USAMO) problems, where these models scored low on new mathematical proofs.

The study, titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity,” was led by Parshin Shojaee and Iman Mirzadeh, with contributions from other researchers including Keivan Alizadeh and Maxwell Horton. They examined what they call “large reasoning models” (LRMs). These models aim to mimic logical thought by producing text that walks through a problem step by step.

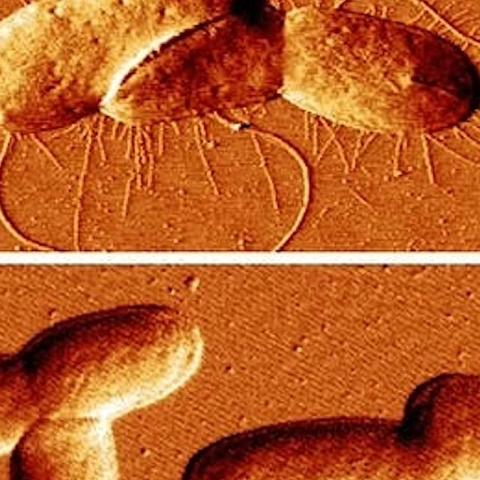

The researchers tested the AI models using classic puzzles, such as the Tower of Hanoi and river crossing challenges. They adjusted the complexity, starting with easy versions and moving to much harder ones. For instance, they used a one-disk Tower of Hanoi puzzle, then escalated to a challenging 20-disk variant requiring over a million moves.

Credit: Apple
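To make the Tower of Hanoi scaling concrete, here is a minimal Python sketch. It is an illustration only, not the researchers’ test harness: the function names (`min_moves`, `hanoi_moves`, `is_valid_solution`) and the peg numbering are assumptions made for this example. It shows why the 20-disk puzzle needs over a million moves (the minimum is 2^n - 1 for n disks) and how a proposed move sequence can be checked step by step rather than judged only by its final state.

```python
from typing import Iterable, Iterator, Tuple

# A move is (source peg, destination peg); pegs are numbered 0, 1, 2.
# This representation is an assumption for illustration only.
Move = Tuple[int, int]


def min_moves(n_disks: int) -> int:
    """Minimum number of moves for the classic puzzle: 2^n - 1."""
    return 2 ** n_disks - 1


def hanoi_moves(n_disks: int, src: int = 0, dst: int = 2, aux: int = 1) -> Iterator[Move]:
    """Yield the standard recursive solution one move at a time."""
    if n_disks == 0:
        return
    # Move the top n-1 disks out of the way, onto the spare peg.
    yield from hanoi_moves(n_disks - 1, src, aux, dst)
    # Move the largest remaining disk to its destination.
    yield (src, dst)
    # Move the n-1 disks from the spare peg onto the largest disk.
    yield from hanoi_moves(n_disks - 1, aux, dst, src)


def is_valid_solution(n_disks: int, moves: Iterable[Move]) -> bool:
    """Replay moves, rejecting any illegal step, then check the goal state."""
    # Each peg is a stack; larger numbers are larger disks.
    pegs = [list(range(n_disks, 0, -1)), [], []]
    for src, dst in moves:
        if not pegs[src]:
            return False  # nothing to move from the source peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # larger disk placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs[2] == list(range(n_disks, 0, -1))


if __name__ == "__main__":
    for n in (1, 3, 10, 20):
        print(f"{n} disks: {min_moves(n)} moves minimum")
    print(is_valid_solution(3, hanoi_moves(3)))
```

Because the move count doubles with every added disk, a short recursive procedure is enough to generate a correct solution of any length. The validator checks every intermediate move, which is a different question from whether a final answer happens to match.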

The researchers noted that most evaluations today focus on whether models produce the right final answer, particularly on math or coding benchmarks. Those evaluations don’t assess whether the models actually reasoned through a problem or merely matched patterns from their training data.

The Apple results line up with the USAMO evaluation, in which most models scored under 5% on new mathematical proofs. Only one model reached 25%, and none delivered a perfect proof in nearly 200 attempts. Both studies found a sharp drop in performance on problems requiring extended systematic reasoning.

The implications of this research are significant. According to AI expert Dr. Emma Richardson, “Understanding the limits of AI reasoning is crucial. If models can’t truly think through problems, we need to adjust our expectations and applications.” This viewpoint reflects a growing skepticism in the tech community about relying too heavily on automated reasoning.

Recent surveys show that public trust in AI’s reasoning abilities is slipping. A 2023 Gallup poll indicated that only 40% of respondents felt comfortable using AI for complex problem-solving, down from 55% the previous year. This shift suggests that, as people become more aware of AI’s limitations, they’re reconsidering its role in decision-making.

In summary, while AI models are advancing, the ability to reason through novel problems is still underdeveloped. This research encourages us to rethink how we evaluate and utilize AI, ensuring we understand its capabilities and limitations.